An Opinionated guide on how not to fail with fast.ai

You can find me on twitter @bhutanisanyam1

This post serves as a little guide for newer fast.ai students. The MOOC’s third iteration goes live in Jan ’19.

This post summarises the mistakes that I made when getting started. Hopefully, it will help you avoid making the same ones when doing fast.ai, or when getting started with any Deep Learning course.

If you’re from the fast.ai community or are going to take a shot at the MOOC, these are the questions that I had asked repeatedly in the community:

How do I get started? I’m a beginner, where should I begin?

I know the basics, which framework should I use?

Is Deep Learning Hard?

Is Machine Learning Hard?

Do I need to know Math?

I know X but Y seems hard, what do I do?

If you’re asking these questions, let me tell you how not to do fast.ai.

Below is a summary of all the wrong steps that I took:

Question: How do I get started?

Common Answer: Find some common “beginner-friendly” course on the internet, read Math, know statistics.

Me: Started with the Andrew Ng course, completed all of the assignments with a bit of peeking.

After: I have no clue what to do next! I see the cool practitioners building projects and sharing them on Twitter but I have no idea where to go next.

Suggestion: Try another beginner friendly Python MOOC.

Me: Completes another Python MOOC, only to realize I don’t know or I’m not proficient at the “ML Libraries” or the libraries that appear to be highly used.

Suggestion: Find a MOOC which teaches NumPy, pandas, and SciPy, and get back to reality again.

Question: Okay, Now I know how to read a CSV using Pandas. What Framework should I use to do “Deep Learning”?

Approach: Joins another “Beginner friendly” course for Tensorflow.

Scrolling through Twitter, discovers Generative Adversarial Network implementations in something called PyTorch.

Considers finding another course in PyTorch.

Question: So I know how to import the library and create a 3 layer NN, how do I learn to build a Project?

Approach: Find another MOOC.

Status: By now, I’ve seen the three-layer NN image too-many-times. I’ve to go through the same set of introductions and end up with the same realization after “completing the MOOC”.

Yes, I know how it works on an abstract level. I have no idea what to do with this knowledge, where to use it, or how to build something new and different.

Realization: (Personal) Deep Learning and Machine learning are very hard.

To do Machine Learning, I need to be able to read papers when I wake up, while I’m having coffee and when in the washroom.

I need to be able to breathe in equations, and magically a Jupyter notebook would appear in my head, complete with all of the Python code.

Clearly, I can’t do this. ML is impossible, which is why people make so much money. I need a Masters or Ph.D. to be able to completely understand it.

Stop right there. If you’re worried about the above realization, or if it even remotely resonates with your thought process, let me tell you: the realization is INCORRECT!

The above is thanks to the mistakes I made when getting started, or while wandering on my “Machine Learning Path”.

Question: Okay, What is the right way?

- The right MOOC is the one you sign up for first.

- The second MOOC that you take (for the same process) is a mistake.

- Focus on getting clear on the basics, then get started with a toy project or code.

- Code, Code, Code!

- As a CS student, I have never found Math to be my weak point. Even during the Flying Car Udacity NanoDegree that I took, Math wasn’t the challenge for me.

Personally, I have realized that code has always been my bottleneck.

I decided to sign up for Fast.ai and I really fell in Love with Jeremy Howard’s teaching style.

But then I fell back into the trap of Learning More.

I realized that yes, I could understand a few things better, so let’s sign up for CS231n or CS224n.

Okay, I heard about Image Segmentation, and that sounds really cool. Hmm, Fast.ai Part 1 doesn’t cover it. Maybe Andrej Karpathy’s lectures will help.

Let me tell you - WATCHING THE LECTURES DOES NOT EQUATE TO DOING MACHINE LEARNING.

Doing implies writing a line of code. It’s okay, it doesn’t have to be smart. You could even get started by writing the same code taught in a lecture.

Fast.ai takes an approach called Top Down.

However, I came from the traditional Bottom-Up approach, where I was taught that the bigger picture comes later: you have to do Matrix Multiplication first, and that image classifier can wait.

Here’s the thing: many of us are lifelong learners, and it is a common misbelief that “watching” more course lectures and banging your head against more Math equations will help you understand the field. This is because anyone who is learning Machine Learning is doing so out of curiosity. No school teaches cutting-edge ML as part of its curriculum, so watching and reading more content seems to be a good substitute.

The Bigger picture

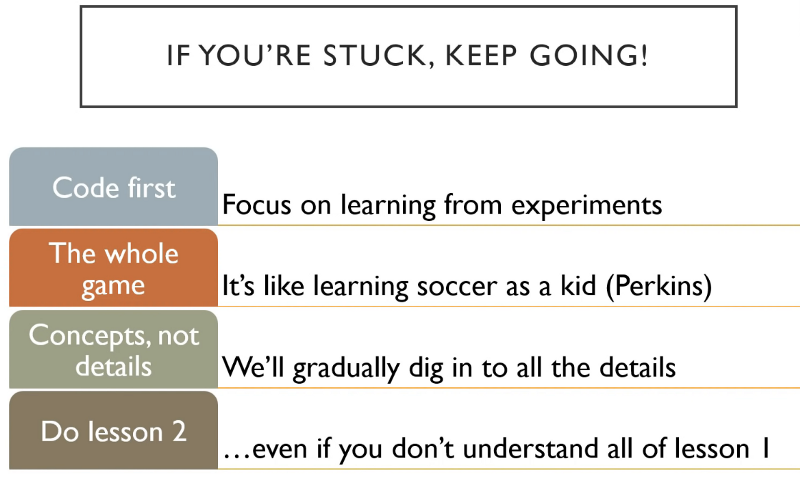

Fast.ai teaches you how to build something first and only takes you into the machinery behind the curtains later, which is okay.

I learned that yes, I can build a SOTA image classifier. I used a library built by very smart people, and I probably cannot do anything without it right now, but yes, it’s possible!

Okay, Now I know the bigger picture.

I think it’s time to pull back the curtains and go backstage?

NO!

This is the mistake I made with the course. The course teaches a cutting-edge, research- and practitioner-led field in the way that a young kid is taught sports.

Of course, if it were baseball, you’d be happy to not know the physics behind the curveball. But since everyone on Twitter seems so smart, and the forums are completely filled with smart people doing amazingly cool projects, maybe a bit of theory would help?

NO!

Opinionated best approaches to doing Fast.ai, Top Down Learning

- Watch the lecture just once.

- It’s okay, you won’t understand everything, and you don’t have to either.

Go out, read through the notebook, press Shift+Enter once, and nod once to all the cells.

- Ctrl+W, open up a fresh notebook and try to replicate the steps that Jeremy had covered.

It’s okay, you won’t be able to at first, and that’s normal. Cheat once or twice, but then get back to your notebook.

- This might seem easy but it’s very hard.

Once you’re able to do this, find a similar dataset.

Jeremy used Dogs Vs Cats, so give Pandas Vs Bears a shot!

- Code as much as you can and pick up the basics of numpy and pandas along the way. It’s okay not to know everything at first. You weren’t born a practitioner!

- It’s okay to be overwhelmed. People from all sorts of domains apply their expertise to the field. You might be an undergrad with just a few internships’ worth of exposure to software development (my case).

Understand that a person with 4 years of coding experience (Experience = Real world/Job Exposure) who takes 1 week to build a project already knows much more than you do.

- It’s okay to not know everything. It took me a little while to realize I cannot learn everything at a super fast pace, even though I wake up at 4.

In my interview series, Dr. Ian Goodfellow, the creator of GANs himself, mentioned that because ML is such a fast-growing field, even he does not try to keep up with all the developments in GANs!

Machine Learning moves very fast. It’s okay if you’re not reading every paper that comes out (it’s almost impossible, in my opinion).

Link to the complete interview

Sanyam Bhutani: Given the explosive growth rates in research, How do you stay up to date with the cutting edge?

Dr. Ian Goodfellow: Not very long ago I followed almost everything in deep learning, especially while I was writing the textbook. Today that does not seem feasible, and I really only follow topics that are clearly relevant to my own research. I don’t even know everything that is going on with GANs.

Let me tell you, even if you spend all of your days trying to digest the papers flowing in from the ML & DL community and churn out Jupyter notebooks (the general assumption of how practitioners live: they read a paper, then they type away the code, and voila!), you’d still have a hard time keeping up.

You need to dedicate your time carefully. I say this because unlike me, many do not have the privilege of being a full-time student.

- One Perfect Project >> Many “good” projects.

Jeremy’s advice is that showcasing one perfect project is always better than having a few incomplete ones. Christine McLeavey Payne, an OpenAI Scholar and fast.ai fellow, has followed this approach with her project, Clara.

Link to the Complete Interview

Sanyam Bhutani: For the readers and the beginners who are interested in working on Deep Learning and dream of joining the OpenAI Scholars program. What would be your best advice?

Christine McLeavey: I think now is such an exciting time to begin this journey. There are so many opportunities (at OpenAI, but also more broadly), and everything you need to learn is available online for free or next to free.

In terms of specifics — one of my favorite pieces of advice was from FastAI’s Jeremy Howard. He told me to focus on making one really great project that showed off my skills. He says that so many people have many interesting but half-finished projects on github, and that it’s much better to have a single one that is really polished.

How do we get used to the Top Down approach?

Honestly, I find it very hard. If you’re good at it, like Kaggle Master Radek, you can go ahead and win Kaggle Competitions.

So, how do you get used to the Top-Down approach?

Link to the Complete Interview

Sanyam Bhutani: [..] Most of us have been taught in a bottom up manner our entire student life, How can we adapt better to the “Top Down” approach?

Dr. Rachel Thomas: This is a good question! [..] many students lose motivation or drop out along the way. In contrast, areas like sports or music are often taught in a “top-down” way in which a child can enjoy playing baseball, even if they don’t know many of the formal rules. Children playing baseball have a general sense of the “whole game”, and learn the details later, over time. We use this top-down approach at fast.ai to get people using deep learning to solve problems right away, and then we teach about the underlying details later as time goes on. Our approach was inspired by Harvard professor David Perkins and mathematician Paul Lockhart.

I still find myself defaulting into a “bottom-up” approach sometimes, because it’s such a habit after 2 decades of traditional schooling. Using something when we don’t understand the underlying details can feel uncomfortable, and I think the key is to just accept that discomfort and do it anyway.

A few things that I’m trying:

- Kaggle Competitions

- Reading the Source Code of the Library: Please note that I’m doing this after watching all the lectures about twice (thrice for a few).

- Reading the Docs

- Code, Code, Code.

To anyone else who feels overwhelmed by Machine Learning, let me tell you: no one can read those 50-line differential equations, blink their eyes, and magically produce elegant-looking code. I definitely cannot, and I haven’t met anyone who can do so effortlessly.

Machine Learning is a fast-moving field. The SOTA of 2017 is already outdated in a few cases.

Also, let me tell you, it is indeed an overwhelming field. Becoming a great practitioner is hard, and it will require a lot of patience and practice.

Yes, becoming a Kaggle Grandmaster is my lifetime dream. But let me tell you, on some days I struggle to get my Nvidia drivers to work, and I often struggle to reach an acceptable accuracy after working on a problem for 3 days, on something where Jeremy absolutely killed the SOTA in just a 2-hour lecture.

My only advice:

- Fire up a jupyter notebook

- Work on small problems every day.

- Improve the model a little each day and learn something new until you find yourself being overwhelmed by a newer idea.

- Referring to Jeremy’s slides from the upcoming MOOC:

The real world analogy to this is:

Picture this:

Your car breaks down on the highway. Do you fiddle around with what you know, or buy a book to understand the thermodynamics of your car engine?

A few disclaimers:

- I’m not against Math, but it has never been my bottle-neck. It’s always been code.

- Eventually, you will have to start reading papers, but starting ML by reading papers before you know the basics is a bad idea.

- Doing more courses isn’t a bad idea, but please master one before you move to the next.

- Personally, I’m a fan of fast.ai and it’s the only course I’d recommend.

For an opinionated post on “How to do fast.ai”, stay Tuned!

