You can find me on Twitter @bhutanisanyam1

The MIT Deep Learning for Self-Driving Cars course just released its first lecture video (alternatively, here are the lecture notes if you want a quick read).

The lecture is an overview of deep learning techniques. It also discusses the future of self-driving tech and gives a brief warning about the gaps in current systems.

Here is the take on how far away we are from an autonomously driven future, plus a brief touch on ethics:

By 2017, computer vision had reached 97.7%+ top-5 accuracy on the ImageNet challenge. Amazing, isn’t it?

So how far are we from a fully autonomous world?

97.7% sounds good enough. Is it?

After all, driving involves a lot of computer vision, and that figure is indeed better than the human high score. So are we close?

The full ImageNet dataset holds about 14M images across roughly 22,000 classes; the challenge itself asks models to classify images into one of 1,000 of those classes.

How good is this accuracy when it’s extrapolated to the real world?

Granted, not all of the challenge’s classes would show up in the self-driving car (SDC) scenario. But they do point to one thing: computer vision, although it is now more accurate than humans on this benchmark, is still not perfect. It isn’t 100% accurate.

That, coupled with the dynamics of the real world, suggests there is a small chance of autonomous systems behaving in unexpected ways. Would you trust the system completely in all scenarios, to handle every situation better than a human driver?
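To put the accuracy gap in numbers, here is a back-of-the-envelope sketch. It assumes every classification is an independent trial, and the 30 frames per second figure is a hypothetical camera rate chosen for illustration; neither assumption comes from the lecture, and real driving stacks do not work frame by frame like this.

```python
def expected_errors(accuracy: float, decisions: int) -> float:
    """Expected number of wrong decisions, assuming independent trials."""
    return (1.0 - accuracy) * decisions


def prob_at_least_one_error(accuracy: float, decisions: int) -> float:
    """Chance that at least one of `decisions` independent trials is wrong."""
    return 1.0 - accuracy ** decisions


ACCURACY = 0.977            # the 97.7% figure quoted above
FRAMES_PER_SECOND = 30      # hypothetical camera rate, an assumption
frames_per_minute = FRAMES_PER_SECOND * 60

print("Expected misclassified frames per minute:",
      round(expected_errors(ACCURACY, frames_per_minute), 1))
print("Chance of at least one error in 100 frames:",
      round(prob_at_least_one_error(ACCURACY, 100), 3))
```

Under these simplified assumptions, a 2.3% error rate adds up to dozens of wrong frames every minute, which is why a high benchmark score alone says little about safety.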

The argument made in the lecture is that SDCs, as of now, will work as tools that help us drive better. They might even drive better than us, but at some points humans would need to intervene. As of 2018, we are in a semi-autonomous year.

Roughly, in 90% of cases the car will drive itself better than we would, but in the remaining 10% human intervention or control would be required.

Universal approval would take 100% accuracy, which requires generalising over all the unexpected cases too. For example, a 1-in-a-million situation where a deer crosses the road still has to be handled.

Lex Fridman argues in the lecture that the ‘perfect system’ would require a lot more research effort and an increase in the efficiency of deep learning algorithms, which as of now are highly inefficient (computationally speaking).

By the perfect case, I’m not referring to a car that can drive itself. The perfect case is one where we’d be so confident in the systems that we would no longer have steering wheels in our vehicles, and human driving would be considered more dangerous than automated driving.

Until then, SDCs will definitely appear on the road, and we might not have to hold the steering wheel for long durations. But there will definitely be moments when human control is required. Hence the term semi-autonomous.

The lecture also briefly mentions ethics with respect to reinforcement learning:

To quote an example from reinforcement learning: reinforcement learning is a set of algorithms in which the AI (the agent) teaches itself to pursue defined goals so that it achieves the maximum reward. Here is a primer for an abstract overview.

Many times, the system (the agent, to be term-specific) behaves in ways that are completely unexpected, yet better at getting results.

Take the example of Coast Runners from the lecture, where you and I would probably play to race and collect green circles. The agent discovers local pockets of high reward, ignoring the “implied” bigger-picture goal of finishing the race.

The cutting-edge AlphaGo and AlphaGo Zero systems have already shown this, playing moves in the game of Go that surprised human experts.
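The Coast Runners behaviour can be sketched with a toy reward comparison. This is not the actual game or the agent from the lecture: the two policies, the reward values, and the track length below are all made-up numbers, chosen only to show how a reward-maximising agent can prefer looping over finishing.

```python
# Toy illustration only: every constant here is a hypothetical value,
# not taken from the Coast Runners game or the lecture.
FINISH_REWARD = 50       # one-time reward for crossing the finish line
CIRCLE_REWARD = 3        # reward for each green circle collected
TRACK_LENGTH = 20        # steps needed to reach the finish line
STEPS_PER_CIRCLE = 2     # circles respawn, so one can be collected every 2 steps


def total_reward(policy: str, steps: int = 100) -> int:
    """Total reward collected by a fixed policy over one episode."""
    if policy == "finish_race":
        # The episode ends at the finish line, so the reward is collected once.
        return FINISH_REWARD if steps >= TRACK_LENGTH else 0
    if policy == "loop_circles":
        # Never finishes; keeps circling a pocket of respawning rewards.
        return (steps // STEPS_PER_CIRCLE) * CIRCLE_REWARD
    raise ValueError(f"unknown policy: {policy}")


policies = ["finish_race", "loop_circles"]
best = max(policies, key=total_reward)

for policy in policies:
    print(policy, total_reward(policy))
print("reward-maximising choice:", best)
```

With these numbers, looping earns 150 against 50 for finishing, so an agent that only sees the reward signal has no reason to complete the race. The “implied” goal was never written into the reward.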

So what if we want to go from A to B in the fastest manner, and the SDC decides to take an approach or path that isn’t expected? (I understand that traffic rules are coded into the core systems, but that doesn’t allow us to overlook the possibility.)

Given that the outcomes can be unexpected, we would definitely need to keep a check on the system.

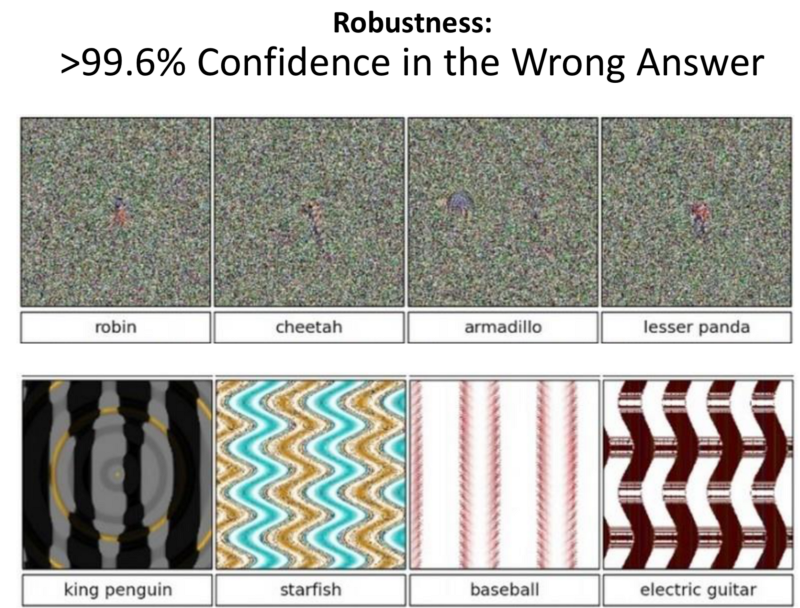

Also, the robustness of vision systems is questionable. Here is an example of how adding a little distortion to an image easily fools state-of-the-art ImageNet-winning models.
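To see why a little distortion can be enough, here is a minimal sketch on a toy linear classifier, not a deep network and not the models from the lecture. It uses the fast-gradient-sign idea: for a linear score, the gradient with respect to the input is just the weight vector, so nudging every "pixel" slightly against the sign of its weight moves the score as far as possible. The weights, the 1,000-pixel input, and the epsilon value are all hypothetical.

```python
def score(weights, x):
    """Linear classifier score: positive means class A, negative means class B."""
    return sum(w * xi for w, xi in zip(weights, x))


def sign(v):
    """Return -1, 0, or 1 depending on the sign of v."""
    return (v > 0) - (v < 0)


def perturb(weights, x, epsilon):
    """Shift each input value by at most epsilon in the direction that lowers the score."""
    return [xi - epsilon * sign(w) for w, xi in zip(weights, x)]


DIM = 1000       # hypothetical number of pixels
EPSILON = 0.02   # hypothetical distortion: 2% of the [0, 1] pixel range

# Hypothetical weights alternating between +1 and -1.
weights = [1.0 if i % 2 == 0 else -1.0 for i in range(DIM)]
# A mid-grey image tilted very slightly towards class A.
x = [0.5 + 0.01 * sign(w) for w in weights]

x_adv = perturb(weights, x, EPSILON)
largest_change = max(abs(a - b) for a, b in zip(x, x_adv))

print("original score is positive:", score(weights, x) > 0)
print("perturbed score is positive:", score(weights, x_adv) > 0)
print("largest per-pixel change:", round(largest_change, 3))
```

No single pixel moves by more than 0.02, yet the predicted class flips, because many tiny changes all push the score in the same direction.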

So, finally: are SDCs here, with Voyage deploying self-driving taxis in The Villages retirement communities and GM testing its mass-production vehicles?

Yes and no. Yes, we have semi-autonomous vehicles around us. But a fully autonomous world, one where cars have no steering wheel at all, is still some way off: a few years, maybe a few decades.

We have a semi-autonomous present (or future) that is starting to take shape.

I believe that, as of now, SDCs will work well in roles such as the Guardian mode showcased by Toyota.

The machine takes control at points where a human might not be able to act promptly: for example, when the vehicle in front of you crashes and a decision needs to be made within milliseconds, or in bad weather where the vehicle can ‘see’ better than humans thanks to its on-board sensors (RADAR in this case).

On the other hand, when situations are complicated, the driver would take control.

On highways, you could turn on the Autopilot, read a newspaper, or play a game. But in complicated situations, human control over the Autopilot system would be required.
