Part 4 of the series where I interview my heroes.

You can find me on twitter @bhutanisanyam1

This is a very special version of the series.

Today, I’m talking to a two-time Grandmaster:

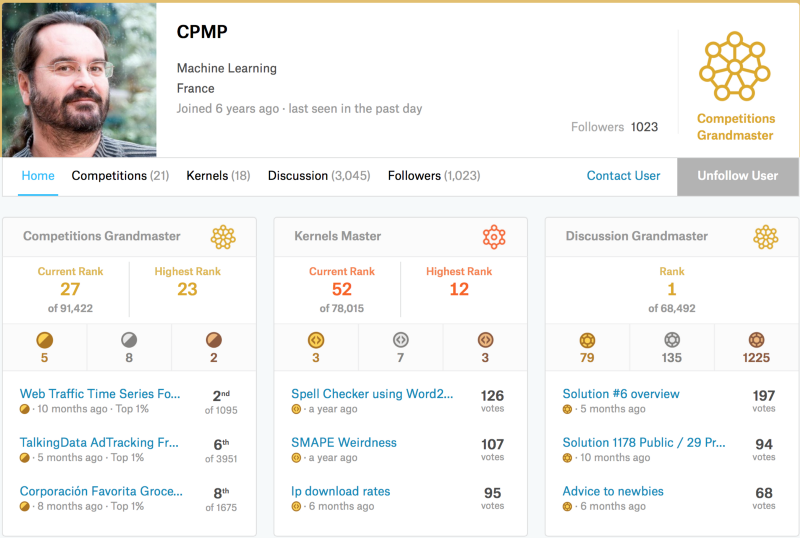

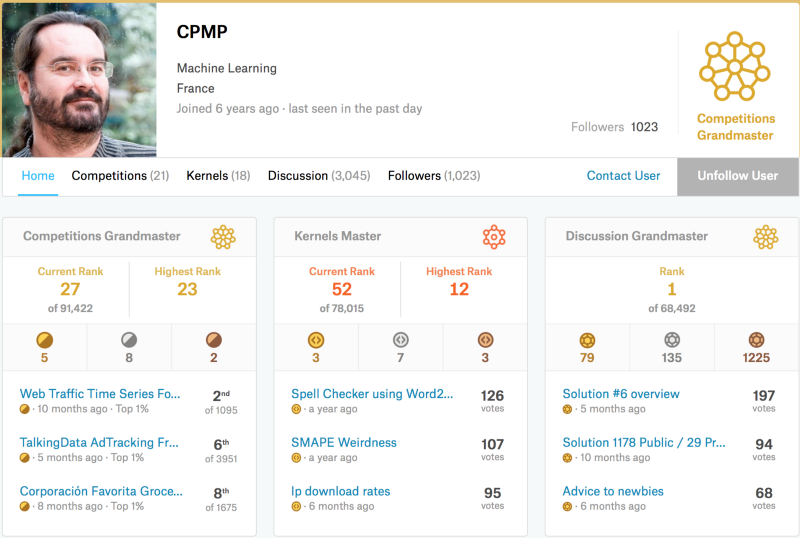

THE Kaggle Discussion Grandmaster (ranked #1), Competition Grandmaster (ranked #27), and also Kernels Master: Dr. Jean-Francois Puget (Kaggle: CPMP).

About the Series:

I have very recently started making some progress on my self-taught machine learning journey. But to be honest, it wouldn’t be possible at all without the amazing online community and the great people who have helped me. In this series of blog posts, I talk with people who have really inspired me.

The motivation behind doing this is that you might see some patterns and, hopefully, learn from the amazing people that I have had the chance to learn from.

Sanyam Bhutani: Hello Grandmaster, Thank you for taking the time to do this.

Dr. Jean-Francois Puget: Thank you for inviting me.

Sanyam Bhutani: Could you tell the readers about your Kaggle journey? How did you get started and get addicted to this “legal drug”?

Dr. Jean-Francois Puget: I started my professional life with a Ph.D. in machine learning, just to say how important this field is for me. Then I moved to a startup called ILOG to work in a different area. Fast forward, ILOG got acquired by IBM, and I moved back to machine learning. ML had evolved a lot since my Ph.D., and I realised I needed a refresher. I took some online courses, like Andrew Ng’s Stanford ML course on Coursera, and read quite a bit, but this was not enough. I needed to get up to date on state-of-the-art ML practice, and Kaggle looked like the right place. After having watched Kaggle for a while, I decided to dip my toes in the water and started competing in May 2016. I got hooked immediately!

Sanyam Bhutani: You’re the technical leader for IBM Machine Learning and Optimization offerings. How do you find time for Kaggle? Are the Kaggle competitions related to your projects at IBM?

Dr. Jean-Francois Puget: I don’t think you can build tools if you don’t know how they are used. That’s why it is important to practice machine learning and data science if you work on tools for machine learning practitioners. Therefore, participating in Kaggle competitions is really useful for my job. That said, I also spend quite a lot of my personal time on Kaggle competitions, during evenings, weekends, or vacations.

Sanyam Bhutani: When you started on Kaggle in 2016, you were already an expert in the ML field. Did Kaggle live up to its promise of being challenging?

Dr. Jean-Francois Puget: Kaggle proved to be way more competitive than I would have imagined. People who don’t enter Kaggle competitions have no idea of how elaborate and advanced winning solutions are.

Sanyam Bhutani: You’ve had amazing results on Kaggle over the past few years. What was your favourite challenge?

For the readers, here is a little snap from Dr. Puget’s kaggle profile.

Dr. Jean-Francois Puget: Of course I love the ones where I fared well. The one I am most proud of is the recent TalkingData competition, where I finished 6th competing alone, ahead of many grandmaster teams. Only 3 people finished with a gold medal in that competition. But the one I enjoyed the most is the 2Sigma New York Apartment Rental, because it had a mix of natural language data and structured data, with lots of room for feature engineering. I only worked 12 days on it, but these were very intense. I recommend this competition to people who want to exercise their feature engineering skills.

Sanyam Bhutani: What kind of challenges do you look for today? How do you decide if a competition is worth your time?

Dr. Jean-Francois Puget: I am now selecting competitions primarily to learn about a domain I don’t master. For instance, I am entering the TGS Salt detection competition to learn more about image segmentation.

Sanyam Bhutani: How do you tackle a new competition? What are your go-to techniques?

Dr. Jean-Francois Puget: The first step is to quickly get a baseline and a submission. This is to clear out any bugs or basic misunderstandings.

The second and most important step is to establish a reliable local validation setting. The goal is to be able to evaluate whether one model is better than another using the training data, instead of relying on the public leaderboard score. If you manage to get this, then you can perform as many experiments as you want, and you are not bound by the limit of 5 submissions a day. Submissions are only used to check that your local validation is reliable, i.e. that when your local score improves, your LB score also improves. The basic tool for that is cross-validation. Mastering cross-validation, and how to define folds, is a key skill. Make sure you understand when you can use a random fold split, and when you must use stratification or time-based fold definitions.
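To make the three fold definitions mentioned above concrete, here is a minimal sketch in plain Python. It is an illustration added for readers, not Dr. Puget's code: the function names (`random_folds`, `stratified_folds`, `time_based_folds`) are hypothetical, and the time-based variant assumes the rows are already sorted by time. In practice, a library such as scikit-learn offers `KFold`, `StratifiedKFold`, and `TimeSeriesSplit` for the same purposes.

```python
import random
from collections import defaultdict


def random_folds(n_samples, n_folds, seed=0):
    """Shuffle all row indices and deal them into n_folds validation folds."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    return [sorted(indices[i::n_folds]) for i in range(n_folds)]


def stratified_folds(labels, n_folds, seed=0):
    """Deal each class separately so every fold keeps a similar class ratio."""
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    rng = random.Random(seed)
    folds = [[] for _ in range(n_folds)]
    for label in sorted(by_class):
        members = by_class[label]
        rng.shuffle(members)
        for position, idx in enumerate(members):
            folds[position % n_folds].append(idx)
    return [sorted(fold) for fold in folds]


def time_based_folds(n_samples, n_folds):
    """Return (train, valid) index pairs where validation rows always come
    after the training rows. Assumes rows are sorted by time."""
    block = n_samples // (n_folds + 1)
    splits = []
    for k in range(1, n_folds + 1):
        train = list(range(0, k * block))
        # The last validation block absorbs any leftover rows.
        end = (k + 1) * block if k < n_folds else n_samples
        valid = list(range(k * block, end))
        splits.append((train, valid))
    return splits


if __name__ == "__main__":
    labels = [0] * 8 + [1] * 4  # imbalanced toy target
    print("random:    ", random_folds(len(labels), 4))
    print("stratified:", stratified_folds(labels, 4))
    print("time-based:", time_based_folds(10, 4))
```

A random split is enough when rows are exchangeable. The stratified version matters when a class is rare, and the time-based version avoids training on rows that come after the validation rows.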

The third step is to really understand the metric, and how to approximate it via loss functions. Sometimes it is easy, for instance using mean squared error (MSE) if the metric is root mean squared error (RMSE). Sometimes it is tricky because the metric is not differentiable, for instance when the metric is ROC AUC for binary classification, or intersection over union for image segmentation.
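The point about metrics and losses can be illustrated with a small sketch in plain Python, added here for readers and not taken from the interview. It shows that MSE and RMSE rank two models the same way, and that ROC AUC depends only on the ordering of the scores, so a small nudge to a score leaves it unchanged while a differentiable surrogate such as log loss still moves. The toy data and the choice of log loss as the surrogate are assumptions made for illustration.

```python
import math


def mse(y_true, y_pred):
    """Mean squared error."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    """Root mean squared error: a monotonic transform of MSE."""
    return math.sqrt(mse(y_true, y_pred))


def roc_auc(y_true, scores):
    """Fraction of (positive, negative) pairs ranked correctly; ties count half."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def log_loss(y_true, probs):
    """Binary cross-entropy, a differentiable stand-in often used when the metric is AUC."""
    total = sum(t * math.log(p) + (1 - t) * math.log(1 - p)
                for t, p in zip(y_true, probs))
    return -total / len(y_true)


if __name__ == "__main__":
    # Regression: whichever model has the lower MSE also has the lower RMSE.
    y_reg = [1.0, 2.0, 3.0]
    model_a = [1.1, 2.1, 2.9]
    model_b = [1.5, 2.5, 2.0]
    print(mse(y_reg, model_a) < mse(y_reg, model_b),
          rmse(y_reg, model_a) < rmse(y_reg, model_b))

    # Classification: nudging one score without changing the ordering
    # leaves AUC flat, while log loss still responds.
    y_clf = [0, 0, 1, 1]
    scores = [0.1, 0.4, 0.35, 0.8]
    nudged = [0.1, 0.4, 0.36, 0.8]
    print(roc_auc(y_clf, scores), roc_auc(y_clf, nudged))
    print(log_loss(y_clf, scores), log_loss(y_clf, nudged))
```

Because AUC is flat almost everywhere as a function of the scores, its gradient gives an optimizer nothing to follow, which is why a smooth loss is trained instead and AUC is only monitored on the validation folds.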

Then come feature engineering, NN architecture choice, hyperparameter optimization, etc. Jumping to these before the preparation steps above is a waste of time.

Sanyam Bhutani: For the readers and noobs like me who want to become better kagglers, what would be your best advice?

Dr. Jean-Francois Puget: Read the write-ups of top teams after each competition ends. It is better if you entered the competition, as you will get more from the write-up, but reading all write-ups is useful IMHO. That’s when you learn the most on Kaggle.

Also, get a decent computing resource, either on-premises machines or cloud services. Kaggle competitions require more and more computing resources as time goes by. This is a general trend in the IT industry anyway.

Last, try hard on your side before looking at the shared material. Reusing shared material, especially kernels, is fine if you don’t use them as a black box. Play with what you want to reuse, and modify it to leverage your local validation setting. For instance, lots of shared kernels have no cross validation; you should add it in that case.

Sanyam Bhutani: For the readers who want to take up machine learning as a career path, do you feel a good Kaggle profile and Kaggle experience are helpful?

Dr. Jean-Francois Puget: Certainly useful. I am getting interesting job offers now that I am a grandmaster. Kaggle is very visible. But one should not overestimate it either. The skills you learn on Kaggle are very useful, but other skills are also required in the real world. The ability to deal with business stakeholders and the ability to gather relevant data are key skills that are not tested on Kaggle. Indeed, Kaggle competitions come with a well-defined business problem and with relevant data.

Sanyam Bhutani: Given the explosive growth rate of ML, how do you stay up to date with state-of-the-art techniques?

Dr. Jean-Francois Puget: I practice, on Kaggle, and also on IBM related ML projects. I also read scientific papers. A good place to get alerts on the interesting material is the KaggleNoobs slack team.

Sanyam Bhutani: What progress are you really excited about in Machine Learning?

Dr. Jean-Francois Puget: I think that gradient boosting machines (GBMs) like XGBoost or LightGBM are the most important development of the last 5 years. Most people would say deep learning. I agree that deep learning is extremely important and exciting, but GBMs are more actionable in the industry right now, as they can be used to replace most predictive models built so far.

Sanyam Bhutani: Do you feel ML as a field will live up to the hype?

Dr. Jean-Francois Puget: The hype has moved away from ML to DL in recent years, and now to AI. Sure, the three domains are closely related, but ML is no longer what makes headlines. That said, I don’t think ML/DL/AI will live up to the current hype. While there are true advances, they are oversold and overgeneralized. There will be a dramatic backlash against AI and deep learning soon, with yet another AI winter after that.

Sanyam Bhutani: Before we conclude, any tips for the beginners who feel overwhelmed to start competing?

Dr. Jean-Francois Puget: Don’t be shy, just try it. Use a pseudonym instead of your real name, so that you have no fear of damaging your reputation. But be prepared to have your ego hurt, because Kaggle is very competitive ;) And use any setback to learn how to do better next time.

Sanyam Bhutani: Thank you so much for doing this interview.

Kaggle Noobs is the best community for Kaggle: you can find Dr. Puget and other Kaggle Grandmasters, Masters, and Experts there, and even noobs like me are welcome. Come join if you’re interested in ML/DL/Kaggle.

Subscribe to my Newsletter for updates on my new posts and interviews with My Machine Learning heroes and Chai Time Data Science