Part 10 of the series where I interview my heroes.

Index and about the series: “Interviews with ML Heroes”

You can find me on Twitter @bhutanisanyam1

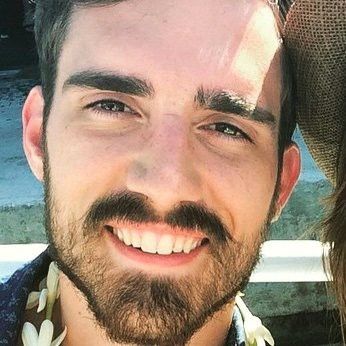

Today, I’m honored and super excited to be talking with someone who is not just my hero, but a hero and role model to the entire OpenMined organisation, its leader: Andrew Trask.

Andrew is a Ph.D. student at Oxford University and the author of the amazing book Grokking Deep Learning. If you’ve taken the Udacity Deep Learning Nanodegree, you will definitely remember his awesome lectures in the NLP section.

About the Series:

I have very recently started making some progress on my self-taught machine learning journey. But to be honest, it wouldn’t be possible at all without the amazing online community and the great people who have helped me.

In this series of blog posts, I talk with people who have really inspired me and whom I look up to as role models.

The motivation behind doing this is that you might see some patterns and, hopefully, learn from the amazing people I have had the chance to learn from.

Sanyam Bhutani: Hello Andrew, thank you so much for doing this interview.

Andrew Trask: My pleasure — thank you for having me.

Sanyam Bhutani: Today you’re a Ph.D. student at Oxford. You’re also the pioneer and leader of OpenMined.

Could you tell the readers about how you got started? What got you interested in Deep Learning?

Andrew Trask: I originally got started in Deep Learning back in undergrad when I took an AI course as a part of my degree. I absolutely fell in love with it and with neural networks in particular (which was fortunate). At the time I was also majoring in Finance and was interested in seeing if neural networks could accurately model the economy (and thus predict stock prices). It became a major passion of mine. My dad helped me buy some second-hand servers which I set up in my apartment and used for scraping Twitter. My wife still teases me about the noise and heat they produced — my air conditioning broke like 4 times! I also ran out of space. They were stacked floor to ceiling in my apartment laundry room, inside the furniture (running), and under my bed. It was a lot of fun.

Sanyam Bhutani: You’re leading a major community, OpenMined, while working on your research. You’ve also been writing your Deep Learning book during the past year.

How do you manage your time so effectively? Why are these contributions important to you?

Andrew Trask: Ha! I’m still working on that, to be honest. The best advice I can give there is to try to find activities where you get synergies of scope. For me that’s a focus on the intersection of cryptography and deep learning. The book, OpenMined, and my research all focus on it, so when I’m working on one I’m also (more or less) working on the rest as well. It doesn’t always work out, but it’s easier than if all three were totally separate.

Sanyam Bhutani: What is being a student at Oxford like?

How would you describe a day in your life?

Andrew Trask: Oxford PhD programs are extremely open ended, which is exactly what I was looking for. I thrive in an environment where I have very little direction (lots of freedom to explore). On most days, I wake up on my narrowboat (where my wife and I live), share breakfast with my wife, and then start doing a mixture of coding/emails/writing depending on what’s needed at the time. I try to get outside and take a walk (we live in the country outside of Oxford) in the afternoon, especially to rest my eyes (they get quite strained looking at a computer screen all day). I also recently installed a projector on our boat, so in the evenings my wife and I like to watch our favorite shows or maybe a movie.

On Mondays and Fridays, however, I take the morning train to London where I work for the day (mostly meetings). It’s a long commute (2 hours each way), but totally worth it.

Sanyam Bhutani: You’re an advocate of Decentralised AI.

Why do you feel it’s important at such an early stage — even when the AI systems aren’t very production ready yet?

Andrew Trask: AI is like any other industry. There’s a supply chain. For AI, it starts with people who create data (normal people like you and me), which is then collected by various applications, aggregated through data markets, refined into AI models, and then sold in the form of either direct insights or models themselves. At the moment, everyday people only really control the very first piece of that value chain. The decentralization/privacy movement is about creating technology which allows everyday people to have ownership/control over a larger portion of that chain. Over time, this increases the chances that the entire system creates value for everyday people, that it works in their interests. Furthermore, the longer one waits to re-direct ownership of a value chain, the more difficult it is to move. To that end, the sooner the better.

Sanyam Bhutani: Apart from Decentralised AI, what do you feel is another aspect that the practitioners must pay attention to?

Andrew Trask: Security and performance are the most frequently overlooked limitations, along with a realistic end-user story. Many people make the mistake of starting with a “sexy idea” and then working backward to the customer. We need to start with customer needs and then figure out how decentralization can meet those needs better than centralization.

Sanyam Bhutani: For the readers and beginners who are interested in working on Deep Learning, what would be your best advice?

Andrew Trask: I give this advice all the time — and it undoubtedly helped me more than any other single activity — but people rarely take advantage of it because it’s time consuming. The secret to getting into the deep learning community is high quality blogging. Read 5 different blog posts about the same subject and then try to synthesize your own view. Don’t just write something ok, either — take 3 or 4 full days on a post and try to make it as short and simple (yet complete) as possible. Re-write it multiple times. As you write and re-write and think about the topic you’re trying to teach, you will come to understand it. Furthermore, other people will come to understand it as well (and they’ll understand that you understand it, which helps you get a job). Most folks want someone to hold their hand through the process, but the best way to learn is to write a blogpost that holds someone else’s hand, step by step (with toy code examples!). Also, when you do code examples, don’t write some big object-oriented mess. Fewer lines the better. Make it a script. Only use numpy. Teach what each line does. Rinse and repeat. When you feel comfortable enough you’ll then be able to do this with recently published papers — which is when you really know you’re making progress!
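As an illustration of the kind of toy example described above, here is a minimal sketch of a short, NumPy-only script with a comment on every line. It is not from the interview: the data, the learning rate, the number of steps, and all variable names are assumptions chosen purely for illustration. It fits a single weight with plain gradient descent so that the prediction matches a target that is twice the input.

```python
import numpy as np

# Toy data (assumed for illustration): each target is simply 2 * input.
inputs = np.array([[1.0], [2.0], [3.0], [4.0]])
targets = 2.0 * inputs

weight = np.zeros((1, 1))   # the single parameter to learn, starting at 0
learning_rate = 0.05        # step size for each update (hypothetical choice)

for step in range(200):
    predictions = inputs @ weight               # forward pass: (4,1) @ (1,1) -> (4,1)
    errors = predictions - targets              # how far off each prediction is
    gradient = inputs.T @ errors / len(inputs)  # gradient of half the mean squared error
    weight -= learning_rate * gradient          # gradient descent update

learned = float(weight[0, 0])                   # pull the scalar out of the 1x1 array
print(learned)                                  # should be very close to 2.0
```

The whole thing is a flat script with no classes, which makes it easy to explain line by line in a post and then extend one concept at a time.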

Sanyam Bhutani: The OpenMined community is building some great tools.

Do you believe open source communities can be as important to AI as a Tech Giant Corporation?

Andrew Trask: Of course! And many of the best open source communities go hand in hand with large corporations (TensorFlow, PyTorch, and Keras, to name a few).

Sanyam Bhutani: Given the explosive growth rates in research, how do you stay up to date with the cutting edge?

Andrew Trask: I follow a good set of folks on Twitter. Even though I’m embedded in and around great co-workers who are “in the know” about new research, I still usually find out about papers first from my Twitter feed.

Sanyam Bhutani: Do you feel Machine Learning has been overhyped?

Andrew Trask: Nope, but privacy has definitely been underhyped. In my opinion, solving privacy is not only how we promote “safe” machine learning, it’s actually key to getting to AGI. Not many people agree with me on this yet, but I am quite confident nonetheless.

Sanyam Bhutani: Do you feel a Ph.D. or Master’s level of expertise is necessary, or can one contribute to the field of Deep Learning without being an “expert”?

Andrew Trask: I published my first paper at ICML as an undergrad. Everything I needed to know to do that I learned by reading, teaching, and talking with others about the concepts. The real secret is finding a position which lets you self-study full time. School can be great for this if you strategize appropriately (find classes where you can do deep learning for your projects).

Sanyam Bhutani: Before we conclude, any advice for beginners who, even though they are excited about the field, feel too overwhelmed to even get started with Deep Learning?

Andrew Trask: I began my journey into Deep Learning 6 years ago. It’s not that long. Deep Learning isn’t like Physics where it takes a whole career to get to the bleeding edge. It’s a relatively new field and it moves quite quickly which means that it’s a bit biased toward new folks coming into the field who have the time to keep up with what’s going on. Also, once you really understand backpropagation, stochastic gradient descent, convolutions, attention, and LSTMs you’ve got most of the big parts down (you know enough to work professionally in deep learning). Many other advances are merely modifications, permutations, or extensions of these concepts.

Sanyam Bhutani: Thank you so much for doing this interview.


Subscribe to my newsletter for updates on my new posts, interviews with my Machine Learning heroes, and Chai Time Data Science.