You can find me on Twitter @bhutanisanyam1

Admittedly, the concept of using pretrained models in NLP is fairly new.

In this post, I share a method taught in v2 of the FastAI course (to be released publicly next year). We first train a language model on the Large Movie Review Dataset, which contains 50,000 reviews from IMDB and so gives us a decent amount of data to train and test our models on. We then reuse the same model to perform sentiment analysis on IMDB reviews.

Language Modelling

Building a model that predicts the next word in a sequence from the words that come before it. Such a model can also be used to generate text in that language.
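To make the idea concrete, here is a minimal sketch of the simplest possible language model: a count-based bigram model that predicts the next word from the previous word only. This is a swapped-in toy technique, not the AWD-LSTM used later in this post; the mini-corpus and the function names `train_bigram` and `predict_next` are hypothetical.

```python
from collections import Counter, defaultdict

def train_bigram(sentences):
    """Count how often each word follows each other word (toy bigram model)."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        tokens = sentence.lower().split()
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts

def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if the word is unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]

# Hypothetical mini-corpus of review snippets.
corpus = [
    "the movie was great",
    "the movie was slow",
    "the acting was great",
]
model = train_bigram(corpus)
print(predict_next(model, "was"))    # great
print(predict_next(model, "movie"))  # was
```

A neural language model plays the same role but conditions on much longer contexts and outputs a probability for every word in the vocabulary.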

Sentiment Analysis

Analysing a piece of text to predict the sentiment it expresses.

IMDB Large Movie Review Dataset

- The dataset contains 50,000 reviews

- All of the reviews are in English and are polarised, i.e. labelled as either positive or negative

Below is a walkthrough of the key steps in our experiment.

Libraries used: PyTorch, FastAI

Why use a pretrained Model?

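- It achieves better accuracy than a traditional approach that trains a classifier from scratch

- Fine-tuning is powerful: the model reuses what it has already learned about the language instead of starting from nothing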

Pretraining

In practice we would normally start from an existing pretrained network, but here we create one ourselves.

We preprocess our data using torchtext, PyTorch’s text-processing library.

TEXT = data.Field(lower=True, tokenize=spacy_tok)

We tokenize our data with spaCy and convert it to lower case.
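spaCy does more than split on whitespace: it separates punctuation from words and splits contractions, which is why the generated sample later in this post contains tokens such as "was n’t". The sketch below is a hypothetical, much simpler regex stand-in (not spaCy and not torchtext) that only mimics lowercasing plus two of those splitting rules; `simple_tokenize` is an illustrative name.

```python
import re

# Hypothetical simplified tokenizer. Alternatives are tried in order:
#   n't          -> the contraction suffix as its own token
#   \w+(?=n't)   -> the word part just before "n't" (e.g. "was" in "wasn't")
#   \w+          -> any other word
#   [^\w\s]      -> a single punctuation character
# spaCy's real tokenization rules are far more extensive.
TOKEN_RE = re.compile(r"n't|\w+(?=n't)|\w+|[^\w\s]")

def simple_tokenize(text):
    """Lowercase the text, then split it into word, contraction and punctuation tokens."""
    return TOKEN_RE.findall(text.lower())

print(simple_tokenize("It wasn't bad."))  # ['it', 'was', "n't", 'bad', '.']
```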

Next, we create our model data object, which feeds the data to the learner for language modelling.

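md = LanguageModelData(PATH, TEXT, **FILES, bs=64, bptt=70, min_freq=10)

- PATH points to the directory containing our dataset.

- TEXT is the torchtext Field defined above, which describes how to preprocess the text.

- FILES is a dictionary pointing to the training, validation and test data.

- bs: the batch size.

- bptt: short for backpropagation through time; the number of words we backpropagate through in one go (the sequence length of each batch).

- min_freq: words that occur fewer than this many times get no vocabulary entry of their own and are mapped to the unknown token instead.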
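The bs, bptt and min_freq settings are easier to picture with a toy version of the pipeline. The sketch below is a hypothetical pure-Python approximation of what torchtext and fastai do for us, not their actual code. It builds a vocabulary that maps rare words to `<unk>`, turns the token stream into integers, lays it out as `bs` parallel streams, and cuts windows of at most `bptt` tokens whose targets are the same tokens shifted one position ahead. The names `build_vocab`, `numericalize` and `lm_batches` are illustrative, and the window length here is fixed.

```python
from collections import Counter

def build_vocab(tokens, min_freq):
    """Map each word seen at least `min_freq` times to an integer id.

    Index 0 is reserved for '<unk>', which stands in for every rarer word.
    Words are sorted alphabetically here for simplicity; torchtext orders
    them by frequency and also reserves other special tokens such as padding.
    """
    counts = Counter(tokens)
    itos = ["<unk>"] + sorted(w for w, c in counts.items() if c >= min_freq)
    stoi = {w: i for i, w in enumerate(itos)}
    return itos, stoi

def numericalize(tokens, stoi):
    """Replace each token by its id; out-of-vocabulary words become 0 (<unk>)."""
    return [stoi.get(t, 0) for t in tokens]

def lm_batches(ids, bs, bptt):
    """Yield (inputs, targets) windows for language-model training.

    The id stream is trimmed and split into `bs` contiguous streams (one per
    batch column). Each window holds up to `bptt` time steps, and the targets
    are the inputs shifted one token ahead.
    """
    n_steps = len(ids) // bs
    # streams[j] is the j-th contiguous chunk of the corpus.
    streams = [ids[j * n_steps:(j + 1) * n_steps] for j in range(bs)]
    for start in range(0, n_steps - 1, bptt):
        end = min(start + bptt, n_steps - 1)
        # Rows are time steps, columns are the bs parallel streams.
        inputs = [[s[t] for s in streams] for t in range(start, end)]
        targets = [[s[t + 1] for s in streams] for t in range(start, end)]
        yield inputs, targets

tokens = "the movie was good the movie was bad the end".split()
itos, stoi = build_vocab(tokens, min_freq=2)
ids = numericalize(tokens, stoi)
batches = list(lm_batches(ids, bs=2, bptt=3))
print(itos)        # ['<unk>', 'movie', 'the', 'was']
print(ids)         # [2, 1, 3, 0, 2, 1, 3, 0, 2, 0]
print(batches[0])  # ([[2, 1], [1, 3], [3, 0]], [[1, 3], [3, 0], [0, 2]])
```

With bs=64 and bptt=70, each window in the real setup is 70 time steps by 64 streams, and the model learns to predict every target token from the tokens before it.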

Neural networks can’t work with words directly, so we need to map each word to an integer. The torchtext library does this for us and stores the mapping in TEXT.vocab (TEXT.vocab.stoi maps words to integers and TEXT.vocab.itos maps integers back to words).

Training

Next, we create a learner object and call its fit method.

learner = md.get_model(opt_fn, em_sz, nh, nl, dropouti=0.05, dropout=0.05, wdrop=0.1, dropoute=0.02, dropouth=0.05)

learner.fit(3e-3, 4, wds=1e-6, cycle_len=1, cycle_mult=2)

- opt_fn: the optimizer function. The model itself is an AWD-LSTM, which is very good at regularization thanks to several kinds of dropout (the dropouti, dropout, wdrop, dropoute and dropouth arguments).

- em_sz: the embedding size.

- nh: the number of hidden activations in each layer.

- nl: the number of layers in our network.

- In fit, we set the learning rate (3e-3), the number of cycles (4), the weight decay (wds) and the cycle length settings (cycle_len, cycle_mult).
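With cycle_len=1 and cycle_mult=2, fit uses cosine annealing with warm restarts (SGDR): within each cycle the learning rate decays along a cosine curve, then jumps back up, and each cycle is cycle_mult times longer than the previous one. The 4 cycles in the fit call above therefore cover 1 + 2 + 4 + 8 = 15 epochs. The sketch below is a hypothetical, simplified version of that schedule with a made-up number of batches per epoch; `cycle_lengths` and `sgdr_schedule` are not fastai functions, and the exact curve fastai uses may differ.

```python
import math

def cycle_lengths(n_cycle, cycle_len, cycle_mult):
    """Length in epochs of each SGDR cycle; each is cycle_mult times the last."""
    return [cycle_len * cycle_mult ** i for i in range(n_cycle)]

def sgdr_schedule(max_lr, n_cycle, cycle_len, cycle_mult, steps_per_epoch):
    """Per-batch learning rates: cosine decay from max_lr towards 0, restarting each cycle."""
    lrs = []
    for length in cycle_lengths(n_cycle, cycle_len, cycle_mult):
        total = length * steps_per_epoch
        for step in range(total):
            lrs.append(max_lr * (1 + math.cos(math.pi * step / total)) / 2)
    return lrs

lengths = cycle_lengths(n_cycle=4, cycle_len=1, cycle_mult=2)
print(lengths, sum(lengths))  # [1, 2, 4, 8] 15

# Hypothetical: 10 batches per epoch, so 15 epochs give 150 learning-rate values.
lrs = sgdr_schedule(3e-3, 4, 1, 2, steps_per_epoch=10)
print(len(lrs))  # 150
```

The restarts give the optimizer several chances to jump out of a poor region, while the longer later cycles let it settle into a good one.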

Embedding matrix: the embedding layer maps each word’s integer id to a learned vector of em_sz numbers. Here is a link to a gentle introduction to embeddings.
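An embedding matrix is a lookup table with one row per vocabulary word. Looking up a word’s row gives the same result as multiplying that word’s one-hot vector by the matrix, only far more cheaply. The tiny, hypothetical sketch below checks that equivalence with made-up numbers; in the real model, em_sz sets the row width and the values are learned during training.

```python
# Hypothetical 4-word vocabulary with 3-dimensional embeddings (em_sz = 3).
embedding = [
    [0.1, 0.2, 0.3],  # id 0: <unk>
    [0.4, 0.5, 0.6],  # id 1: "movie"
    [0.7, 0.8, 0.9],  # id 2: "the"
    [1.0, 1.1, 1.2],  # id 3: "was"
]

def lookup(word_id):
    """Embedding lookup: simply select the row for this id."""
    return embedding[word_id]

def one_hot_matmul(word_id):
    """Same result the slow way: one-hot row vector times the embedding matrix."""
    one_hot = [1.0 if i == word_id else 0.0 for i in range(len(embedding))]
    n_cols = len(embedding[0])
    return [sum(one_hot[i] * embedding[i][j] for i in range(len(embedding)))
            for j in range(n_cols)]

print(lookup(1))          # [0.4, 0.5, 0.6]
print(one_hot_matmul(1))  # [0.4, 0.5, 0.6]
```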

The Language Model

Here is a sample of text produced by the trained model:

. So, it wasn’t quite was I was expecting, but I really liked it anyway! The best performance was the one in the movie where he was a little too old for the part . i think he was a good actor , but he was nt that good . the movie was a bit slow , but it was n’t too bad . the acting …

So far, we have created a model that can generate plausible movie reviews, starting from a model that didn’t even understand English. Next, we fine-tune it for our target task.
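Text like the sample above is produced one token at a time: the model outputs a probability for every word in the vocabulary, a word is picked from that distribution, appended to the text, and fed back in as input. The sketch below shows that loop with a hypothetical hard-coded next-word table standing in for the trained network. `NEXT_PROBS` and `generate` are illustrative names, and random sampling (rather than always taking the most likely word) is one common choice, not necessarily the one used to produce the sample.

```python
import random

# Hypothetical stand-in for the trained model: next-word probabilities
# conditioned only on the previous word.
NEXT_PROBS = {
    "the": {"movie": 0.6, "acting": 0.4},
    "movie": {"was": 1.0},
    "acting": {"was": 1.0},
    "was": {"good": 0.5, "slow": 0.3, "bad": 0.2},
    "good": {".": 1.0},
    "slow": {".": 1.0},
    "bad": {".": 1.0},
}

def generate(prompt, n_words, rng):
    """Autoregressive sampling: draw a next word, append it, repeat."""
    tokens = prompt.split()
    for _ in range(n_words):
        probs = NEXT_PROBS.get(tokens[-1])
        if not probs:  # no known continuation for this word, so stop
            break
        words = list(probs)
        weights = [probs[w] for w in words]
        tokens.append(rng.choices(words, weights=weights, k=1)[0])
    return " ".join(tokens)

# Output varies with the seed, e.g. "the movie was slow ."
print(generate("the", 4, random.Random(0)))
```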

Fine-tuning

So far, we have trained our model on language modelling. Now we reuse it to predict the sentiment of movie reviews.

We start by loading our pretrained model.

model.freeze_to(-1)

model.fit(lr, 1, metrics=[accuracy])

model.unfreeze()

model.fit(lr, 1, metrics=[accuracy], cycle_len=1)

We first freeze every layer group except the last one and train for one epoch at our chosen learning rate, tracking accuracy as the metric. We then unfreeze the whole model and train all of its layers for another cycle.
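The effect of freeze_to(-1) followed by unfreeze() can be pictured as toggling a "trainable" flag on each layer group. The sketch below is a hypothetical pure-Python model of that bookkeeping, not fastai’s implementation; the group names are made up, and in PyTorch the flag would correspond to each parameter’s requires_grad attribute.

```python
# Hypothetical layer groups, ordered from input to output.
layer_groups = ["embedding", "lstm_1", "lstm_2", "lstm_3", "classifier_head"]
trainable = {g: True for g in layer_groups}

def freeze_to(n):
    """Freeze every group, then make the groups from index n onward trainable.

    Negative n counts from the end, so freeze_to(-1) leaves only the
    last group trainable.
    """
    for g in layer_groups:
        trainable[g] = False
    for g in layer_groups[n:]:
        trainable[g] = True

def unfreeze():
    """Make every layer group trainable again."""
    freeze_to(0)

freeze_to(-1)
print([g for g in layer_groups if trainable[g]])  # ['classifier_head']
unfreeze()
print(all(trainable.values()))  # True
```

Training only the last group first lets the new classifier settle before its gradients reach, and possibly disturb, the pretrained language-model layers.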

Performance

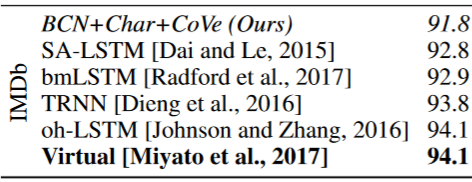

Learned in Translation: Contextualized Word Vectors is a paper that includes a comparison of cutting-edge models’ performance on the IMDB dataset, which serves as a benchmark.

After tuning the learning rates and tweaking the cycle lengths, the model achieves an accuracy of

0.94511217948717952, i.e. 94.51%!

Conclusion

We started with a model that was decent at producing IMDB movie reviews and fine-tuned it into a sentiment classifier.

The 2017 state of the art on this benchmark is 94.1%. So applying a pretrained language model actually outperformed cutting-edge academic research as well.
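To put that margin in perspective, here is the error-rate arithmetic using only the two accuracies quoted in this post:

```latex
\begin{aligned}
\text{error}_{2017} &= 100 - 94.1 = 5.9\% \\
\text{error}_{\text{ours}} &= 100 - 94.51 = 5.49\% \\
\text{relative error reduction} &= \frac{5.9 - 5.49}{5.9} = \frac{0.41}{5.9} \approx 6.9\%
\end{aligned}
```

So the 0.41-point accuracy gain removes roughly 7% of the remaining errors.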

I’m personally working with my college to build a model that analyses the sentiment of faculty reviews submitted by students.


Subscribe to my Newsletter for updates on my new posts and interviews with My Machine Learning heroes and Chai Time Data Science