A write-up of my first Kaggle competition experience

You can find me on Twitter @bhutanisanyam1

While planning my self-taught Machine Learning journey this year, I pledged to finish in the Top 25% of any two live Kaggle competitions.

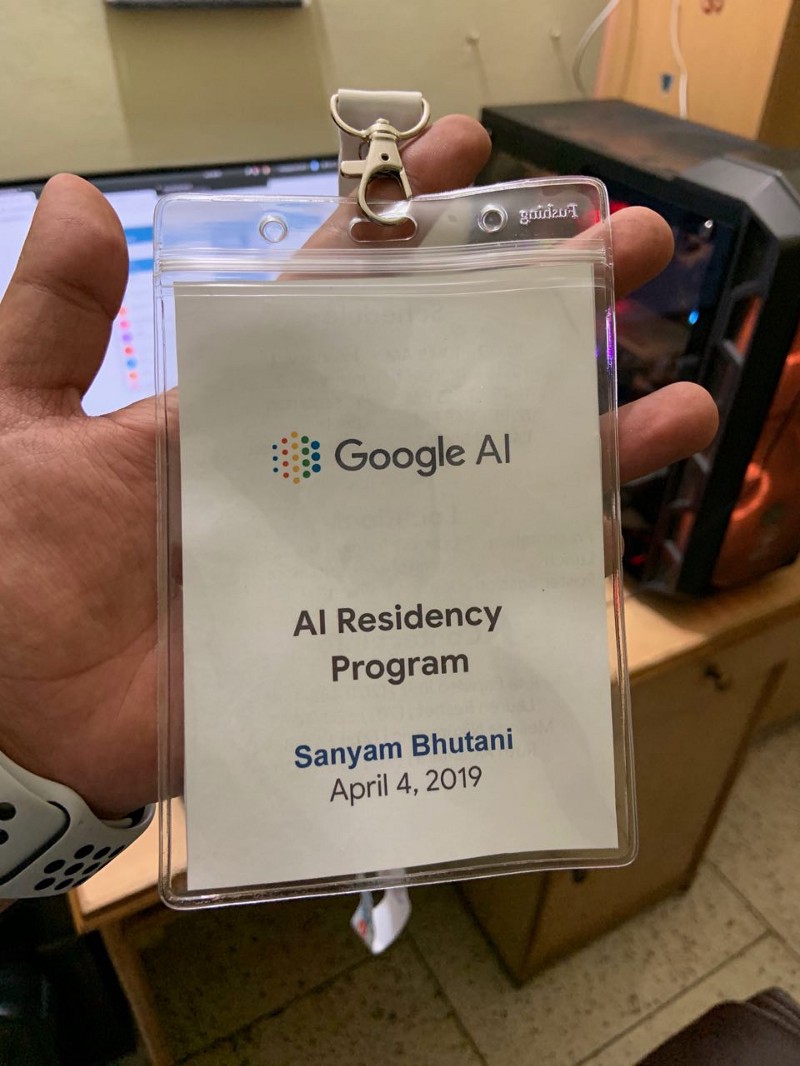

This is a write-up of how Team “rm-rf /” made it into the Top 30% in our first-ever Kaggle competition: the “Quick, Draw! Doodle Recognition Challenge” by the Google AI team, hosted on Kaggle.

Special mention: Team “rm-rf /” was a two-member team consisting of my business partner and friend Rishi Bhalodia and myself.

Experience

Picture this: a race that goes on for three months. There is no finish line, only high scores. You’re a seasoned runner at your school.

The catch?

You’re up against people speeding by in supercars (Grandmasters with a LOT of experience) while you’re running barefoot. Sure, you’re pretty good against your friends, but against a car?

In a sense, it was like a game of PUBG: we free-fell into the middle of the fight, grabbed onto any gun we could and tried to make it to the “safe zone”.

The Safe Zone for us was the medal area.

So why is everyone so addicted to Kaggle?

Personally, I learned much more in one month of competing than in any one-month MOOC I have ever taken.

The “Experts” on Kaggle are always generous with their ideas. We were surprised that many people would give out almost their complete solutions while sitting at the top of the leaderboard (LB)!

Every night we’d make a good submission, and the next morning we’d wake up to find we had dropped 20 ranks on the LB! Hit reset, work towards a better submission, and repeat every day.

In the end, we finished 385th out of 1316 on the private LB (roughly the top 29%): with our ideas and the compute we had, that was as far as we could get.

It was an amazing experience, and I’ll definitely compete more and try to do better.

On many occasions, after landing a Top 100 submission and sleeping soundly, we’d wake up to find that a newly shared public kernel had knocked us 50 positions down the LB!

People who compete on Kaggle are truly passionate: there is always an overflow of ideas and an overflow of talent.

For us, it was about giving it our best shot: living for a few weeks on minimal sleep, breaking and rebuilding conda environments, and trying to turn the ideas shared in the discussions into code.

In the end, I really learned why Kaggle is the Home of Data Science.

Goal

It has always been a dream of mine to perform well in Kaggle competitions. For starters, I set the bar at two Top 25% finishes.

Doodle Challenge:

Even though the competition had a very large dataset and a few interesting challenges, we were giving it our best shot at a medal.

Competition

Goal: identify doodles from a dataset of CSV files that contain the information needed to “draw” them.
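To make “drawing” concrete, here is a minimal pure-Python sketch that rasterizes one drawing into a square grayscale bitmap. It assumes each row’s `drawing` column holds a JSON list of strokes, each stroke being `[[x0, x1, ...], [y0, y1, ...]]` with coordinates in the 0–255 range (our reading of the simplified CSVs, stated here as an assumption). `render_strokes` and `draw_line` are hypothetical helpers of ours, not part of the starter pack; lines are one pixel wide, drawn with Bresenham’s algorithm.

```python
import json


def draw_line(img, x0, y0, x1, y1):
    """Set every pixel on the segment (x0, y0)-(x1, y1) using Bresenham's algorithm."""
    dx, dy = abs(x1 - x0), -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx + dy
    while True:
        img[y0][x0] = 255  # mark the pixel as "ink"
        if x0 == x1 and y0 == y1:
            break
        e2 = 2 * err
        if e2 >= dy:  # step in x
            err += dy
            x0 += sx
        if e2 <= dx:  # step in y
            err += dx
            y0 += sy


def render_strokes(drawing_json, size=64):
    """Render a JSON stroke list into a size x size bitmap (list of rows, 0 or 255).

    Assumes coordinates lie in 0..255, so they are scaled down to 0..size-1.
    """
    strokes = json.loads(drawing_json)
    img = [[0] * size for _ in range(size)]
    scale = (size - 1) / 255
    for xs, ys in strokes:
        pts = [(round(x * scale), round(y * scale)) for x, y in zip(xs, ys)]
        if len(pts) == 1:  # a single tap still leaves a dot
            x, y = pts[0]
            img[y][x] = 255
        for (x0, y0), (x1, y1) in zip(pts, pts[1:]):
            draw_line(img, x0, y0, x1, y1)
    return img
```

In practice you would hand the bitmap to an image library or straight to the model; the sketch only shows how a row of coordinates becomes pixels, and why the image size is a knob you can turn.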

The challenge: the training data held ~50 million drawings!

Personally, I had already done a LARGE number of MOOCs, and given how many times I had gone over the definition of a CNN, I was confident it would be pretty easy to land a bronze medal. Of course, I was very wrong.

Things that did not work

We’re very grateful to Kaggle Master Radek Osmulski for sharing his fast.ai starter pack; we built on top of it, along with a few tricks from Grandmaster Beluga.

Mistake 1: Starting late. As beginners, it’s always advisable to start a Kaggle competition while it is still fresh.

Why?

The number of ideas shared during a competition is just huge! Even the top LB scorers are very generous in sharing their approaches; it’s just a matter of whether you can figure out the missing nuggets of information and convert their ideas into code.

We joined the competition more than halfway through its run.

Mistake 2: We learned the importance of “experimenting” and validation the hard way.

The starter pack works on 1% of the data. I experimented with 5% and then 10% of the data, and each step showed a consistent increase in performance.
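One way to grow the training set from 1% to 5% to 10% without starting over is to shuffle each class once with a fixed seed and take a longer prefix each time: every smaller sample is then contained in the larger one, and all classes stay balanced. This is a sketch under our own assumptions (rows arrive as `(label, item)` pairs; `nested_subsample` is a hypothetical helper, not the starter pack’s code):

```python
import random
from collections import defaultdict


def nested_subsample(rows, fraction, seed=42):
    """Return about `fraction` of the rows of every class.

    Each class is shuffled with a fixed, per-class seed and we keep a prefix,
    so the 1% sample is a subset of the 5% sample, which is a subset of 10%.
    """
    by_class = defaultdict(list)
    for label, item in rows:
        by_class[label].append(item)

    subset = []
    for label in sorted(by_class):
        items = by_class[label]
        rng = random.Random(f"{seed}-{label}")  # reproducible shuffle per class
        order = list(range(len(items)))
        rng.shuffle(order)
        k = max(1, int(len(items) * fraction))  # keep at least one per class
        subset.extend((label, items[i]) for i in order[:k])
    return subset
```

Because the samples are nested, a jump in score from 5% to 10% comes from the extra data rather than from a lucky reshuffle.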

Next, I decided to throw all the data at the model; training ran for ~50 hours on my 8 GB GPU. We expected a top score from this approach; instead, the model’s accuracy fell through the floor!

Lesson learned: experiments are very important, and so is validation. The starter pack relies on “drawing” the images out as files and then training the model on them. The issue with Linux is that a filesystem can only hold a limited number of files (one inode per file), and on ext4 that limit is fixed when the filesystem is formatted, unless you format it with enough inodes for that many files.
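Before rendering tens of millions of images, it is worth checking how many more files the target filesystem can actually hold. A minimal sketch using Python’s `os.statvfs` (POSIX only); the helper names and the idea of comparing against the planned image count are our own:

```python
import os


def free_inodes(path="."):
    """Inodes still available to an unprivileged user on path's filesystem."""
    return os.statvfs(path).f_favail


def has_room_for(n_files, path="."):
    """True if the filesystem under `path` can take `n_files` more files."""
    total = os.statvfs(path).f_files
    if total == 0:
        # Some filesystems allocate inodes dynamically and report 0 here;
        # in that case there is no fixed file-count limit to check.
        return True
    return free_inodes(path) >= n_files


if __name__ == "__main__":
    planned = 50_000_000  # roughly one image per drawing
    print("free inodes:", free_inodes("."))
    print("room for all images:", has_room_for(planned, "."))
```

From the shell, `df -i` reports the same inode numbers.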

We didn’t run a validation check, so we never noticed that the model had trained on only a portion of the data. A representative validation set is something you must set up right from the start.
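A cheap way to get a validation set that stays fixed while the training fraction grows is to route each drawing by a hash of its id. The sketch assumes every drawing has a stable identifier (we assume a `key_id`-style column) and uses a hypothetical 5% holdout. Because the split depends only on the id, runs on 5%, 10% or all of the data are scored on the same drawings, so you can compare them before spending a submission.

```python
import hashlib


def is_validation(key_id, valid_pct=0.05):
    """Deterministically send roughly `valid_pct` of ids to the validation set."""
    digest = hashlib.sha256(str(key_id).encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) / 0xFFFFFFFF  # map the hash to [0, 1]
    return bucket < valid_pct


def split_keys(key_ids, valid_pct=0.05):
    """Split ids into (train, valid); the same id always lands in the same split."""
    train, valid = [], []
    for key in key_ids:
        (valid if is_validation(key, valid_pct) else train).append(key)
    return train, valid
```

The training subsets (1%, 5%, 20%, ...) are then drawn only from the `train` side, so no validation drawing ever leaks into training.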

Ideas that worked

Progressive training and resizing:

- Train the model on 1% of the data at image size 256.

- Fine-tune the model on 5% of the data at image size 128.

- Fine-tune it further on 20% of the data at image size 64.

- ResNet18 < ResNet34 < ResNet50 < ResNet152 in performance when trained on the same portion of the dataset.

This approach showed a consistent increase in performance.
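The staged schedule above can be written down as data, with the weights carried from one stage into the next. This is only a skeleton under our own assumptions: `train_stage` is a hypothetical callback standing in for whatever samples the data, renders it at the given size and fine-tunes the model (with fastai or anything else).

```python
from dataclasses import dataclass
from typing import Any, Callable, List


@dataclass
class Stage:
    fraction: float  # share of the training data used in this stage
    image_size: int  # side length the doodles are rendered at


# The stages listed above: more data, smaller images, as training goes on.
SCHEDULE = [Stage(0.01, 256), Stage(0.05, 128), Stage(0.20, 64)]


def run_progressive(model: Any, schedule: List[Stage],
                    train_stage: Callable[[Any, float, int], Any]) -> Any:
    """Run each stage in order, feeding the model from one stage into the next.

    `train_stage(model, fraction, image_size)` (hypothetical) must return the
    fine-tuned model, which becomes the starting point of the following stage.
    """
    for stage in schedule:
        model = train_stage(model, stage.fraction, stage.image_size)
    return model
```

Keeping the schedule as a plain list makes it easy to try other orderings or sizes without touching the training code.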

Ensembling: our best submission was a blend of a ResNet152 trained with fastai (v1) on 20% of the dataset and the MobileNet kernel by Kaggle GM Beluga.
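Blending here means averaging the per-class probabilities of the two models and submitting the top-ranked classes. A minimal sketch, assuming both models output probabilities over the same class order; the equal weights in the test and the names `blend` and `top_k` are illustrative assumptions, not the weights we actually used. The default `k=3` matches the competition’s three guesses per drawing (MAP@3).

```python
from typing import List, Sequence


def blend(prob_sets: Sequence[Sequence[float]], weights: Sequence[float]) -> List[float]:
    """Weighted average of per-class probability vectors from several models."""
    total = sum(weights)
    n_classes = len(prob_sets[0])
    return [
        sum(w * probs[c] for probs, w in zip(prob_sets, weights)) / total
        for c in range(n_classes)
    ]


def top_k(probs: Sequence[float], labels: Sequence[str], k: int = 3) -> List[str]:
    """Labels of the k highest-scoring classes, best first."""
    order = sorted(range(len(probs)), key=lambda c: probs[c], reverse=True)
    return [labels[c] for c in order[:k]]
```

A weighted average lets a stronger model count for more; the weights themselves are best tuned on a held-out validation set rather than on the public LB.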

Summary

You will find many better write-ups in the competition’s discussion forum, so please excuse this post if these ideas don’t get you a top LB score.

This is really a personal summary of what I learned while competing.

Finally, I’ve pledged to compete a lot more; hopefully, you’ll find Team “rm-rf /” somewhere on the LB in upcoming competitions.

I’ve been bitten by the “Kaggle Bug”, and in the future I’ll probably choose competing over signing up for more MOOCs.

See you on the LB, Happy Kaggling!


Subscribe to my newsletter for updates on my new posts, interviews with my Machine Learning heroes, and Chai Time Data Science.